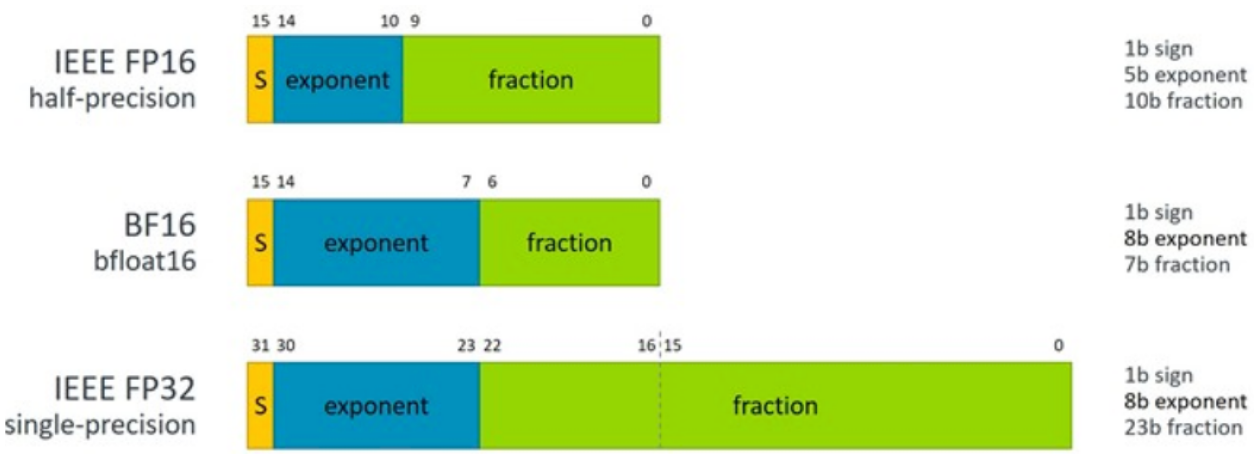

PyTorch on Twitter: "FP16 is only supported in CUDA, BF16 has support on newer CPUs and TPUs. Calling .half() on your network and tensors explicitly casts them to FP16, but not all
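The caption above contrasts FP16 with BF16. As a rough illustration of why the two 16-bit formats behave differently, here is a minimal sketch that uses only Python's standard library. It does not use PyTorch, and the helper names `to_fp16` and `to_bf16` are hypothetical, introduced only for this example. The BF16 helper truncates the low 16 bits of the float32 encoding, which is a simplifying assumption; real hardware and libraries typically round rather than truncate.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to IEEE 754 half precision (FP16) and back to a Python float.

    struct's 'e' format is binary16. Values outside the FP16 range
    (largest finite value 65504) raise OverflowError instead of becoming inf.
    """
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_bf16(x: float) -> float:
    """Approximate bfloat16 by keeping the top 16 bits of the float32 encoding.

    Assumption for illustration: plain truncation, not round-to-nearest.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]


if __name__ == "__main__":
    # FP16 has 10 mantissa bits, BF16 only 7, so BF16 is coarser near 0.1.
    print("fp16(0.1) =", to_fp16(0.1))
    print("bf16(0.1) =", to_bf16(0.1))

    # BF16 keeps float32's 8 exponent bits, so 1e5 is still representable
    # (with reduced precision); FP16 cannot hold it at all.
    print("bf16(1e5) =", to_bf16(100000.0))
    try:
        to_fp16(100000.0)
    except OverflowError as exc:
        print("fp16(1e5) overflows:", exc)
```

The trade-off this sketch shows is that FP16 spends its bits on precision while BF16 spends them on range, which is one reason a blanket cast to FP16 is not safe for every value.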

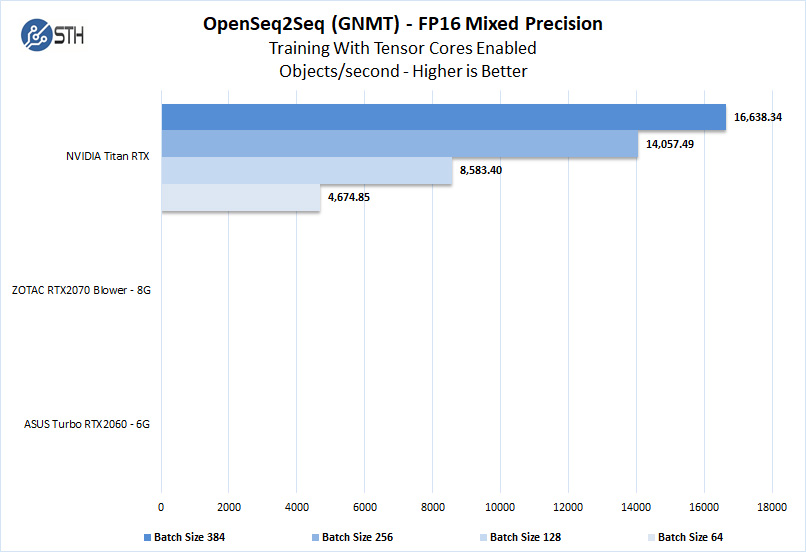

Revisiting Volta: How to Accelerate Deep Learning - The NVIDIA Titan V Deep Learning Deep Dive: It's All About The Tensor Cores

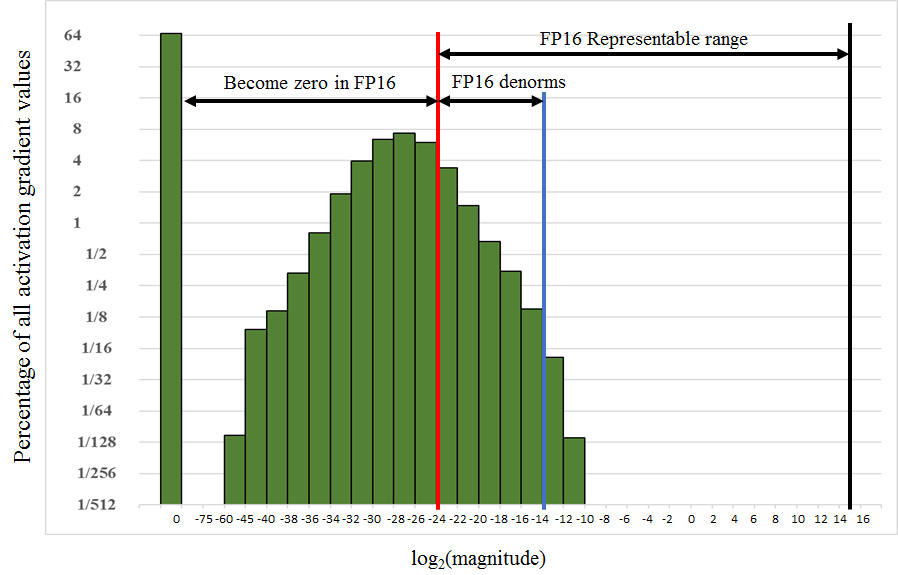

![\[RFC\]\[Relay\] FP32 -> FP16 Model Support - pre-RFC - Apache TVM Discuss](https://discuss.tvm.apache.org/uploads/default/original/2X/4/450bd810eb5b388a3dc4864b1bdd5f78cd01d2dc.png)